About the project

Girls Code It Better is an Italian project trying to bridge the gender gap in STEM subjects, similar to the after-school clubs organized by Girls Who Code.

The class consists of 14 girls with an average age of 13. Half of them had already taken coding or technology classes. Lessons are held weekly and last 3 hours.

Views, thoughts, and opinions expressed here belong solely to the author, and not necessarily to the author’s employer, organization, committee, or other group or individual.

Going in blind

I deliberately avoided preparing the lesson or even outlining the topics beforehand, so I threw myself into it without any idea of how to lead the 3-hour lecture. All I had in mind was to start by finding out what the kids thought coding was, and then try to build something from there.

After a brief introduction and a round of names and presentations (~5 minutes), the other teacher gave me the floor.

I started by asking what “Coding” and “Programming” were, listening to everyone who raised a hand, spoke up, or mumbled something, or simply pointing at whoever had an “I kinda-ish have an idea but I’m not really sure, or whatever, I may as well shut up” look.

Time factor

At first, programming was basically the same as remote-controlling something: the two had the same features. I tried to get them to distinguish these concepts by asking what constituted “programming” and what constituted “giving commands”.

This was considered “programming”:

- Previous experiences with Blockly/Scratch,

- Drawing a path a robot will follow,

- Camera Timer,

- A/C.

While this was “controlling”:

- TV Remote,

- RC Cars.

So the first distinguishing factor appeared: time. Programming made it possible to carry out a predefined, fixed sequence of activities in the future, while “controlling” had an immediate effect.
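To make the time factor concrete, here is a minimal Python sketch (not something we wrote in class). A “command” acts immediately, while a “program” is a fixed action decided now and performed later. The `Device` class and its `command`, `schedule`, and `tick` methods are hypothetical names, and time is simulated with an integer counter rather than a real clock.

```python
class Device:
    """A toy device whose clock is a simple counter (not real time)."""

    def __init__(self):
        self.now = 0        # simulated current time, in seconds
        self.program = []   # stored (time, action) pairs to run later
        self.log = []       # (time, action) pairs actually performed

    def command(self, action):
        # "Controlling": the action happens right now
        self.log.append((self.now, action))

    def schedule(self, at, action):
        # "Programming": a fixed action is decided now, performed at time `at`
        self.program.append((at, action))

    def tick(self, seconds=1):
        # Advance the clock and perform every stored action that is now due
        self.now += seconds
        due = sorted(p for p in self.program if p[0] <= self.now)
        for _, action in due:
            self.log.append((self.now, action))
        self.program = [p for p in self.program if p[0] > self.now]


tv = Device()
tv.command("channel up")            # TV remote: immediate effect

camera = Device()
camera.schedule(10, "take photo")   # camera timer: decided now, happens later
for _ in range(10):
    camera.tick()

print(tv.log)      # [(0, 'channel up')]
print(camera.log)  # [(10, 'take photo')]
```

The only difference between the two devices is *when* the action runs, which is exactly the distinction the class arrived at.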

Reverse engineering WhatsApp and smartphone “routines”

At this point I took smartphones as an example and asked them to describe some of the behaviours phones exhibit, in order to introduce the choice factor. This was pretty easy: I started by asking whether they saw the same actions every time on their phones (UI, Settings, WhatsApp) or whether there were different results or screens.

Things were different, and those perceived differences were determined by choices made by the user, either beforehand or during the activity!

Some examples:

- Notification delivery. Type of sound, volume. How did the phone “decide” what to show and how? How are you able to edit those preferences? How are they stored?

- WhatsApp mechanics. This was both surreal and funny, as they could describe exactly how WhatsApp showed ticks (the Read receipt setting), and exactly how things worked when you block someone or when you are the one blocked. They even knew how ticks/views worked in the Stories tab.

(The concepts of spam and cyberbullying came up.)

Data and Storage

Letting them reason about how WhatsApp showed contact names, how it “remembered” ringtones, where it stored whether someone was blocked or whether a message had been read, and so on, allowed me to introduce the concept of a “Dictionary of preferences”: a store holding everything you told the application, which can be consulted at different points in time whenever a decision has to be made.

At this point they understood that opening a chat or receiving a notification actually meant the application had to read those preferences and behave accordingly.
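The “Dictionary of preferences” idea maps almost literally onto a Python `dict`. The sketch below is only an illustration: the keys, the fake phone numbers, and the `on_message_received` function are hypothetical and say nothing about how WhatsApp really stores its settings. What it shows is that the app decides what to display by reading stored preferences at the moment a message arrives.

```python
# Hypothetical "dictionary of preferences" for a messaging app.
# Keys and values are illustrative, not WhatsApp's real settings storage.
preferences = {
    "notification_tone": "chime",
    "notification_volume": 7,
    "blocked_contacts": {"555-0199"},
    "contact_names": {"555-0101": "Giulia"},
}


def on_message_received(sender, text, prefs):
    """Decide what to show by consulting the stored preferences."""
    # Choice: is the sender blocked?
    if sender in prefs["blocked_contacts"]:
        return None  # blocked: no notification at all
    # Choice: do we know a name for this number? Otherwise show the number.
    name = prefs["contact_names"].get(sender, sender)
    tone = prefs["notification_tone"]
    volume = prefs["notification_volume"]
    return f"[{tone} @ vol {volume}] {name}: {text}"


print(on_message_received("555-0101", "Ciao!", preferences))
# [chime @ vol 7] Giulia: Ciao!
print(on_message_received("555-0199", "Spam", preferences))
# None
```

Changing a setting means changing a value in the dictionary; the next notification reads the new value without any change to the function itself.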

We reached the point where we could distinguish 3 types of “preferences”:

- Accessible choices. How are they “exposed”? Is everything “free”, or are there options with a limited set of values? Are there constraints on fields?

- Blocked choices. E.g. Apple decides how many attempts you get to unlock with a PIN: you can’t choose to have 5 or 10 tries, while other models “exposed” this option,

- Indirect choices. E.g. iOS makes the clock black if the wallpaper is very light, and white if the wallpaper is dark.
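The three kinds of preferences can be sketched in a few lines of Python. Everything here is an assumption for illustration: the allowed lock types, the 0 to 15 volume range, the value of `MAX_PIN_ATTEMPTS`, and the 0.5 brightness threshold are made up, not taken from any real phone. Accessible choices get a setter with validation, blocked choices are constants with no setter, and indirect choices are computed from something else.

```python
# Hypothetical sketch of the three kinds of "preferences" we distinguished.

# 1. Accessible choices: exposed to the user, but with constraints.
ALLOWED_LOCK_TYPES = {"PIN", "Pattern", "Passphrase"}  # limited set of values


def set_lock_type(prefs, value):
    if value not in ALLOWED_LOCK_TYPES:
        raise ValueError(f"lock type must be one of {sorted(ALLOWED_LOCK_TYPES)}")
    prefs["lock_type"] = value


def set_volume(prefs, value):
    if not 0 <= value <= 15:  # constraint on the field (range is assumed)
        raise ValueError("volume must be between 0 and 15")
    prefs["volume"] = value


# 2. Blocked choices: decided by the manufacturer, no setter is exposed.
MAX_PIN_ATTEMPTS = 6  # illustrative value, not any vendor's real number


# 3. Indirect choices: derived from another setting, never set directly.
def clock_color(wallpaper_brightness):
    """Brightness from 0.0 (black) to 1.0 (white); the 0.5 threshold is assumed."""
    return "black" if wallpaper_brightness > 0.5 else "white"


prefs = {}
set_lock_type(prefs, "PIN")
set_volume(prefs, 8)
print(prefs)                                # {'lock_type': 'PIN', 'volume': 8}
print(clock_color(0.9), clock_color(0.1))   # black white
```

Trying `set_volume(prefs, 20)` raises a `ValueError`, which is the code equivalent of a settings screen that simply won’t let you pick that value.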

Flowcharts

Devices were “mere executors”. This seemed clear, and the idea that every difference really has to be triggered by different conditions came up many times. I started formalizing how choices were made by drawing how the system UI asks you to set a new screen lock, introducing the concepts of “Actions” and “Choices” in a kinda-flowchart notation.

“Setting a screen lock” and “Asking for a code” activities

We then formalized, one step at a time, how the UI offers different choices when setting the screen lock. The main choices that emerged were:

- Previous password check (if any),

- Type of screen lock and the resulting view/keyboard shown (PIN, Pattern, passphrase),

- Confirmation prompts,

- Confirmation dialogs that bring you back to the start of the process.

We walked through the paths together, acting as the “executors” of those programmed paths. We also tried to understand what events could actually trigger this activity (factory reset/first use, Settings -> Change screen lock).
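The screen lock flowchart can be written as a short Python function, where every `if` is a “Choice” and every step that reads input is an “Action”. This is a sketch, not how any real OS implements it: `set_screen_lock`, the keyboard names, and the idea of feeding the user’s answers as a list are assumptions made so that the path can be “walked” just like we did on paper. Passing `current_code=None` plays the role of first use or a factory reset.

```python
# Hypothetical sketch of the "Setting a screen lock" flowchart.
# Choices are the if/else branches; Actions are the steps that do something.
# User input is simulated by a list of answers so the flow can be "walked".

KEYBOARDS = {"PIN": "numeric", "Pattern": "dot grid", "Passphrase": "full keyboard"}


def set_screen_lock(current_code, answers):
    """Return (lock_type, keyboard, new_code), or None if the old code is wrong."""
    answers = iter(answers)
    # Choice: is there a previous password to check?
    if current_code is not None:
        if next(answers) != current_code:   # Action: ask for the old code
            return None                     # wrong code: stop here
    while True:
        lock_type = next(answers)           # Action: pick PIN/Pattern/Passphrase
        keyboard = KEYBOARDS[lock_type]     # Choice: which view/keyboard to show
        first = next(answers)               # Action: enter the new code
        second = next(answers)              # Action: confirmation prompt
        # Choice: do the two entries match?
        if first == second:
            return lock_type, keyboard, first
        # No match: the confirmation dialog brings you back to the start


# First use: no previous code
print(set_screen_lock(None, ["PIN", "1234", "1234"]))
# ('PIN', 'numeric', '1234')

# Settings -> Change screen lock, with one mismatched confirmation
print(set_screen_lock("1234", ["1234", "PIN", "5678", "5679",
                               "Passphrase", "ciao", "ciao"]))
# ('Passphrase', 'full keyboard', 'ciao')
```

The `while True` loop is the arrow in the flowchart that goes back to the start after a failed confirmation.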

We did the same with the “Asking for a code” activity, focusing on what events could trigger it (locking/unlocking the phone, changing the password, rebooting), which also gave us the chance to reason about what “locked” and “turned off” meant.
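One way to capture the difference between “locked” and “turned off” is a tiny state machine. In this hypothetical sketch the states, event names, transition table, and the default code `"1234"` are all assumptions: a phone that is off ignores “unlock” entirely, a locked phone asks for the code, and a reboot (power off, then on) always ends in the locked state, so the next unlock asks for the code again.

```python
# Hypothetical transition table: (state, event) -> (next state, ask for a code?)
TRANSITIONS = {
    ("off", "power_on"): ("locked", False),             # a (re)boot always ends locked
    ("locked", "unlock"): ("unlocked", True),           # unlocking asks for the code
    ("unlocked", "lock"): ("locked", False),            # locking never asks
    ("unlocked", "change_password"): ("unlocked", True),  # confirm identity first
    ("locked", "power_off"): ("off", False),
    ("unlocked", "power_off"): ("off", False),
}


def handle_event(state, event, code_entered=None, correct_code="1234"):
    """Return the new state; stay put if the event needs a code and it's wrong."""
    # Events that make no sense in this state (e.g. "unlock" while off) change nothing
    next_state, asks_code = TRANSITIONS.get((state, event), (state, False))
    if asks_code and code_entered != correct_code:
        return state  # wrong or missing code: nothing changes
    return next_state


s = handle_event("off", "power_on")     # 'locked'
s = handle_event(s, "unlock", "0000")   # still 'locked': wrong code
s = handle_event(s, "unlock", "1234")   # 'unlocked'
s = handle_event(s, "lock")             # 'locked', no code asked
print(s)                                # locked
print(handle_event("off", "unlock", "1234"))  # off: a phone that is off can't unlock
```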

Volume control

(We were in the last hour now, and I was trying to give them practical things to try.)

I asked what other processes they could observe in smartphones. Someone mentioned the volume control routine, which behaves differently depending on which button you press (the physical Volume + and - buttons).

I thought this was simple enough for them to tackle with the diagrams, so I gave them the task of “describing” how the volume changes, using the flowchart notation we had just used. They worked in groups of 2, and after ~10 minutes each group produced a diagram with a “Choice” element based on which UI or physical button was pressed, some of them even specifying the increment/decrement step based on how long the button was held.

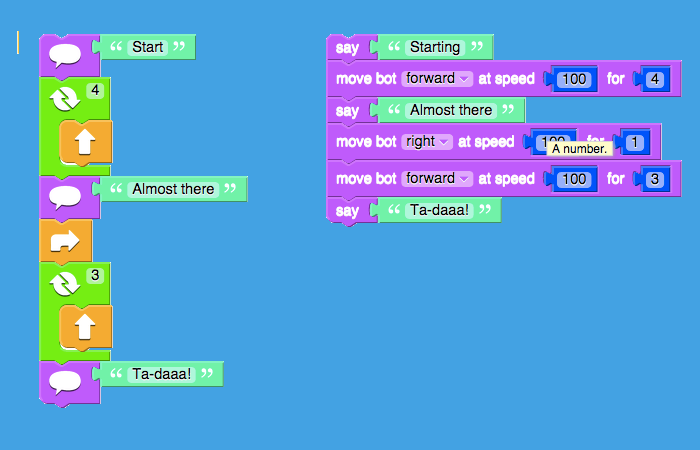
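The groups’ volume flowcharts translate into a small Python function. This is a sketch under assumptions: the 0 to 15 range, the 1-second “long press” threshold, and the step sizes of 1 and 3 are invented for illustration and are not what any particular phone does. Each “Choice” in the diagram becomes an `if`, and the final clamp keeps the value from going below the minimum or above the maximum.

```python
# Hypothetical sketch of the volume-control flowchart.
MIN_VOLUME, MAX_VOLUME = 0, 15  # range is an assumption


def press(volume, button, seconds=0.2):
    """Return the new volume after holding 'up' or 'down' for `seconds`."""
    # Choice: how long was the button held? (threshold and steps are made up)
    step = 3 if seconds >= 1.0 else 1
    # Choice: which button was pressed?
    if button == "up":
        volume += step
    elif button == "down":
        volume -= step
    # Action: keep the value within the allowed range
    return max(MIN_VOLUME, min(MAX_VOLUME, volume))


print(press(7, "up"))                  # 8
print(press(7, "down", seconds=1.5))   # 4
print(press(14, "up", seconds=2))      # 15 (clamped at the maximum)
print(press(0, "down"))                # 0 (clamped at the minimum)
```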

On Scratch

Two groups had previously used Scratch, so we gave them the task of coding this process with blocks, with “volume” as a variable. With some help on how to express the “decrement”, both succeeded in producing a working event-based (keyboard presses) program that showed a value decreasing or increasing depending on which key was pressed.

The solutions were a bit different: one group used the Change x by y block, putting -1 in y, while the other ended up using the Set x to y block, putting x - 1 in y.
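Both Scratch solutions do the same thing, which is easy to see once they are translated into Python. The functions `change_by` and `set_to` below are hypothetical stand-ins for the two Scratch blocks, not a real Scratch API: “change by” adds to the current value, while “set to” replaces it with a value that, in the second group’s case, was itself computed from the old one.

```python
def change_by(value, amount):
    # Scratch "change [volume] by (amount)": add amount to the current value
    return value + amount


def set_to(value, new_value):
    # Scratch "set [volume] to (new_value)": replace the value entirely;
    # the old value only matters if new_value was computed from it
    return new_value


volume = 10
group_1 = change_by(volume, -1)       # change [volume] by (-1)
group_2 = set_to(volume, volume - 1)  # set [volume] to ((volume) - 1)
print(group_1, group_2)  # 9 9
```

Note that putting just -1 in the Set block would set the volume to -1 instead of lowering it by one, which is why the second solution needs the `volume - 1` expression.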

Final considerations

I had a strong feeling that they felt tested, and that they assumed many questions had a single right answer or a hidden purpose. After the other teacher and I said (and reinforced) a couple of times that there were no “more correct” answers, or correct answers at all, they seemed relieved and started trying more boldly, taking wild guesses and long shots. This helped. A lot.

Even though the lesson was in a difficult time slot (2:30-5:30 PM), they were always attentive and I never caught anyone staring off in 3 hours (this may have set a high bar for me?). Questions and observations were always on point.

At some point they started asking about behaviours in apps they used daily that they couldn’t trace back to any process they could describe, and we tried to tackle them together, looking for sources of variability or splitting the observed behaviour into smaller parts.

We also didn’t have any “proper” break between activities, and the purely theoretical part lasted 2 hours. I had been warned to give a break, ask for feedback, and generally have a proper “practical” activity every ~20 minutes. This did happen, but in a “wrong”, more scattered way across the 2 hours, so the other teacher said it would be better to let new concepts settle in a more regular and structured way.

What worked

- Giving an idea of what programming was.

- Starting to explain flowcharts and how to use them as a tool for computational thinking: tackling and describing behaviours observed in programmable devices, focusing on choices and the factors behind those choices.

- Introducing computational thinking and giving them a tool to formalize it.

What didn’t

The first three items are the result of feedback from the other teacher or from external sources.

- I shouldn’t expect the same progression from everyone, and I shouldn’t expect everyone to be at the same point at any given time.

- Try to leave more time and space to let them engage in discussions and express opinions on what we are saying. This could also serve as “relief” or break time.

- Every 20 minutes, stop introducing new ideas and give them time to absorb the concepts, asking for examples or letting them apply these concepts in practice.

- My speech pattern. I frequently found myself correcting the words I was about to use, since I was reaching for complex, technical terminology not suitable for this audience.

- I was supposed to ask what they expected from the course and what they thought it was about. Totally missed that. Which also leads to the next point.

- My memory. I can’t remember names. Or things. I could link faces with previous considerations they made, though. Which is pretty cool.

- My emoji knowledge. I was asked why WhatsApp updates seemed to bring new emojis too, even though the keyboard and WhatsApp are different components, and I wasn’t able to explain why this was happening beyond a few guesses. Apparently, on Android, emoji updates are rolled out with WhatsApp updates. It seems they are bundled with Android updates, but Google lets WhatsApp “enable” them whenever WhatsApp wants, even on (some) older Android versions, after some beta testing. Apple, on the other hand, ships and enables new emojis with iOS updates, independently of the WhatsApp version. Actually, Unicode version support and WhatsApp’s own emoji set are also factors in this […]. TIL.